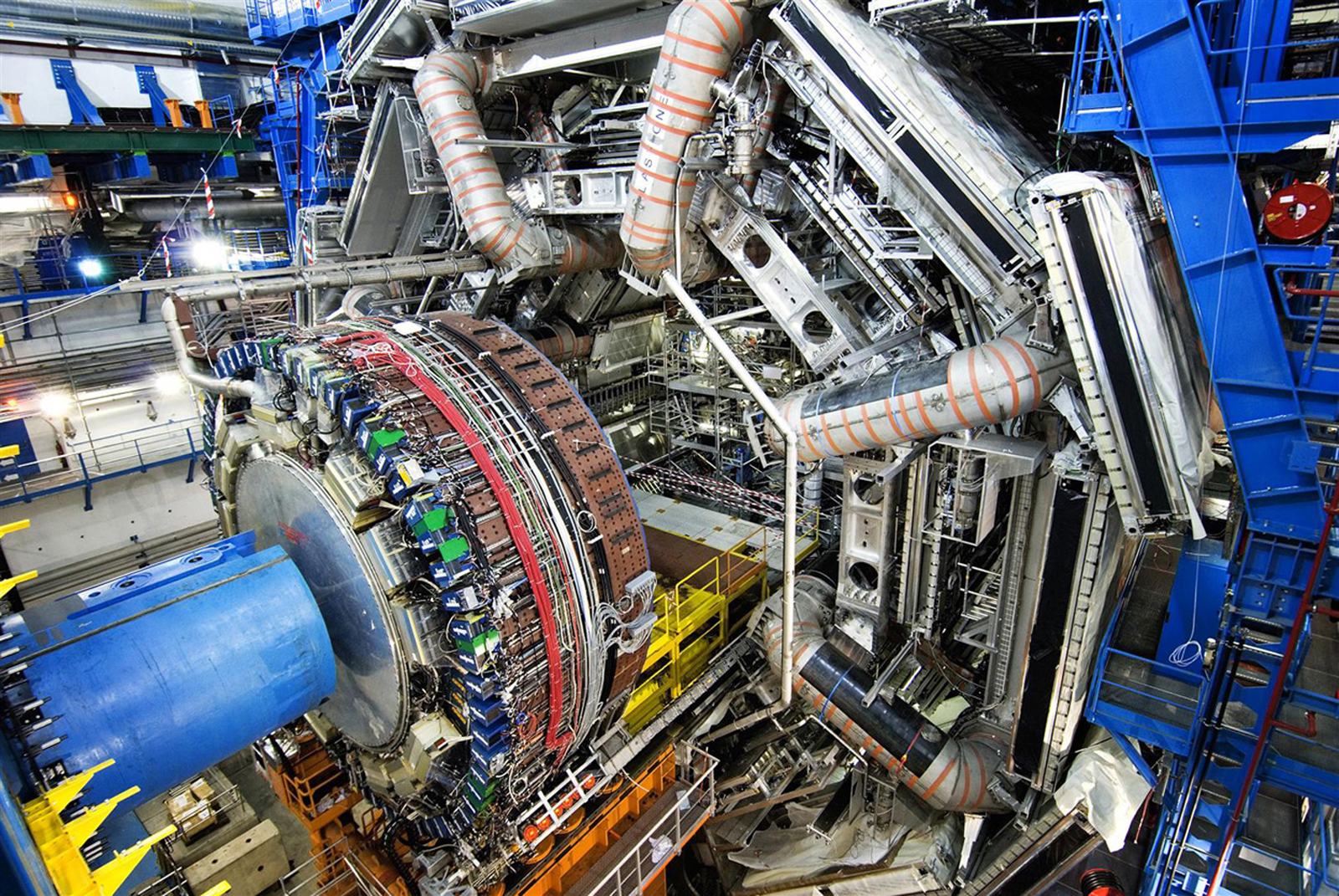

Taking a closer look at LHC

Taken from AI at CERN

CERN operates some of the most complex scientific machinery ever built, relying on intricate control systems and generating petabyte upon petabyte of research data. Operating this equipment and analysing the data it produces are both demanding tasks. CERN is therefore increasingly looking to the broad field of artificial intelligence (AI) to help address the challenges it faces in handling data, controlling particle beams and maintaining its facilities.

The modern field of artificial intelligence emerged at around the same time as CERN itself, in the mid-1950s. The term means different things in different contexts; CERN is mainly interested in task-oriented, so-called restricted AI, rather than general AI, which involves aspects such as independent problem-solving or even artificial consciousness. Particle physicists were among the first groups to use AI techniques in their work, adopting machine learning (ML) as far back as 1990. Physicists at CERN are also interested in using deep learning, a branch of ML based on multi-layer neural networks, to analyse the data deluge from the LHC.

Dealing with a data deluge

Even before the Large Hadron Collider started colliding high-energy proton beams in 2010, the particle-physics community was already collecting unprecedented quantities of data. Particles collide within the LHC's detectors up to 40 million times a second, and each collision event generates about a megabyte of data: far too much to store without some filtering.
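Multiplying the two figures quoted above shows why filtering is unavoidable. The short Python sketch below does the arithmetic; it assumes that "about a megabyte" means exactly 10^6 bytes and that the peak rate of 40 million events per second is sustained, so the result is only an order-of-magnitude estimate, not an official CERN figure.

```python
# Order-of-magnitude estimate of the raw LHC data rate, using the figures
# quoted in the text. Assumptions: "about a megabyte" is taken as exactly
# 10**6 bytes, and the peak collision rate is held constant throughout.
COLLISIONS_PER_SECOND = 40_000_000  # up to 40 million collision events per second
BYTES_PER_EVENT = 1_000_000         # ~1 MB per event (decimal megabyte, assumed)
SECONDS_PER_DAY = 24 * 60 * 60

# Raw (unfiltered) data rate in bytes per second
raw_rate_bytes_per_s = COLLISIONS_PER_SECOND * BYTES_PER_EVENT

# Same rate expressed in terabytes per second (1 TB = 10**12 bytes)
raw_rate_tb_per_s = raw_rate_bytes_per_s / 10**12

# Hypothetical output if that rate were sustained for a whole day,
# in exabytes (1 EB = 10**18 bytes)
raw_per_day_eb = raw_rate_bytes_per_s * SECONDS_PER_DAY / 10**18

print(f"Raw data rate: {raw_rate_tb_per_s:.0f} TB/s")
print(f"Unfiltered output per day: {raw_per_day_eb:.2f} EB")
```

Under these assumptions the raw rate comes out at about 40 terabytes per second, or roughly 3.5 exabytes per day of continuous running, which is why events must be selected before anything is stored.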

Not only do scientists have to program their data-acquisition systems to select the right events for further analysis while discarding uninteresting data, they also have to examine trillions of stored collision events in search of the signatures of rare physics phenomena. They have therefore turned to machine learning, a sub-domain of AI, to make these tasks more efficient and effective. In fact, the LHC's four major collaborations (ALICE, ATLAS, CMS and LHCb) have formed the Inter-experimental Machine Learning (IML) Working Group to keep track of developments in ML.

Researchers are also collaborating with the wider data-science community to organise workshops that train the next generation of scientists in these tools, and to produce original research in deep learning. ROOT, the data-analysis framework developed at CERN and used by physicists around the world, also comes with machine-learning libraries, such as its Toolkit for Multivariate Data Analysis (TMVA).

Operating in extreme environments

Experimental facilities at CERN may be temporarily classified as high-radiation zones, which prevents people from entering to carry out repairs or replace equipment. CERN has therefore developed autonomous robots to operate in these zones, which include the tunnel housing the LHC. CERN's Engineering department, which builds and maintains these robots, uses AI techniques to help them navigate on their own and decide which actions to take inside radiation areas.

Machine learning is also used in the CERN accelerator complex to predict and avoid equipment failures and to optimise the quality of the high-energy proton beams that CERN delivers to its experiments. Physicists are also investigating how similar techniques could make the operation of accelerators more efficient, more reliable and possibly even autonomous.

Bringing state-of-the-art techniques to CERN

In addition to applying AI to the robotic maintenance of its complex machines, to predicting component failures and to safety applications, CERN has recognised the importance of bringing external AI expertise into projects undertaken at the laboratory.

Much of the collaboration with these experts takes place through CERN openlab, a public-private partnership that enables CERN to work with world-leading researchers and companies in AI. CERN openlab has launched several machine-learning projects, ranging from improving industrial control systems and simulating conditions inside particle detectors after high-energy collisions to investigating future quantum machine learning (QML) algorithms. CERN openlab also contributes to the use of CERN's AI resources for humanitarian operations and recently organised a conference on the ethics of the growing use of AI around the world.

CERN is also keen to use its AI know-how to have a positive impact on society as a whole. The CERN Knowledge Transfer Group works towards this goal with partners in the automotive, finance and pharmaceutical sectors.

More:

ROOT: analyzing petabytes of data, scientifically.

IML: Inter-Experimental LHC Machine Learning Working Group

Machine learning could help reveal undiscovered particles within data from the Large Hadron Collider

Boosting particle accelerator efficiency with AI, machine learning and automation


AUTHORS Xabier Cid Vidal, PhD in experimental Particle Physics from Santiago University (USC). Research Fellow in experimental Particle Physics at CERN from January 2013 to December 2015. Until 2022 he was affiliated with the Department of Particle Physics of the USC as a "Juan de La Cierva" and "Ramon y Cajal" fellow (Spanish senior postdoctoral grants) and as Associate Professor. Since 2023 he has been a Senior Lecturer in that Department (ORCID). Ramon Cid Manzano was, until his retirement in 2020, a secondary-school Physics teacher at IES de SAR (Santiago, Spain) and a part-time Lecturer (Profesor Asociado) in the Faculty of Education at the University of Santiago (Spain). He holds a Degree in Physics and a Degree in Chemistry, and a PhD from Santiago University (USC) (ORCID).


IMPORTANT NOTICE

For the bibliography used in writing this Section, please see the References Section.

© Xabier Cid Vidal & Ramon Cid - rcid@lhc-closer.es | SANTIAGO (SPAIN)